We applied the text-cleaning methods from this article directly to the data. I opted for Lemmatization over Stemming, since words reduced to their root form, or lemma, tend to be more interpretable than words chopped off by stemming.

We'll start by importing the necessary libraries and loading our data. The function below trains one LDA model for each candidate number of topics and records its coherence score:

```python
from gensim.models import CoherenceModel, LdaModel

# chunksize, iterations, passes and eval_every are training settings
# assumed to be defined earlier in the notebook.
def compute_coherence_values(dictionary, corpus, texts, cohere, limit, start=2, step=2):
    coherence_values = []
    for num_topics in range(start, limit, step):
        # Train one LDA model per candidate topic count
        model = LdaModel(
            corpus=corpus,
            id2word=dictionary,
            num_topics=num_topics,
            chunksize=chunksize,
            alpha='auto',
            eta='auto',
            iterations=iterations,
            passes=passes,
            eval_every=eval_every,
            random_state=42,
        )
        # Score the trained model with the requested coherence measure
        coherencemodel = CoherenceModel(
            model=model, texts=texts, dictionary=dictionary, coherence=cohere
        )
        coherence_values.append(coherencemodel.get_coherence())
    return coherence_values
```

Next, we'll create some parameters for stepping through the range of topics we want to test. Some corpora might have hundreds of topics; smaller ones will have few. You can play with these values to see how your results change. I originally stepped up to 100 topics in increments of 5 to narrow down the window, then stepped by 2 to finish.

# Sklearn LDA Coherence Score Series

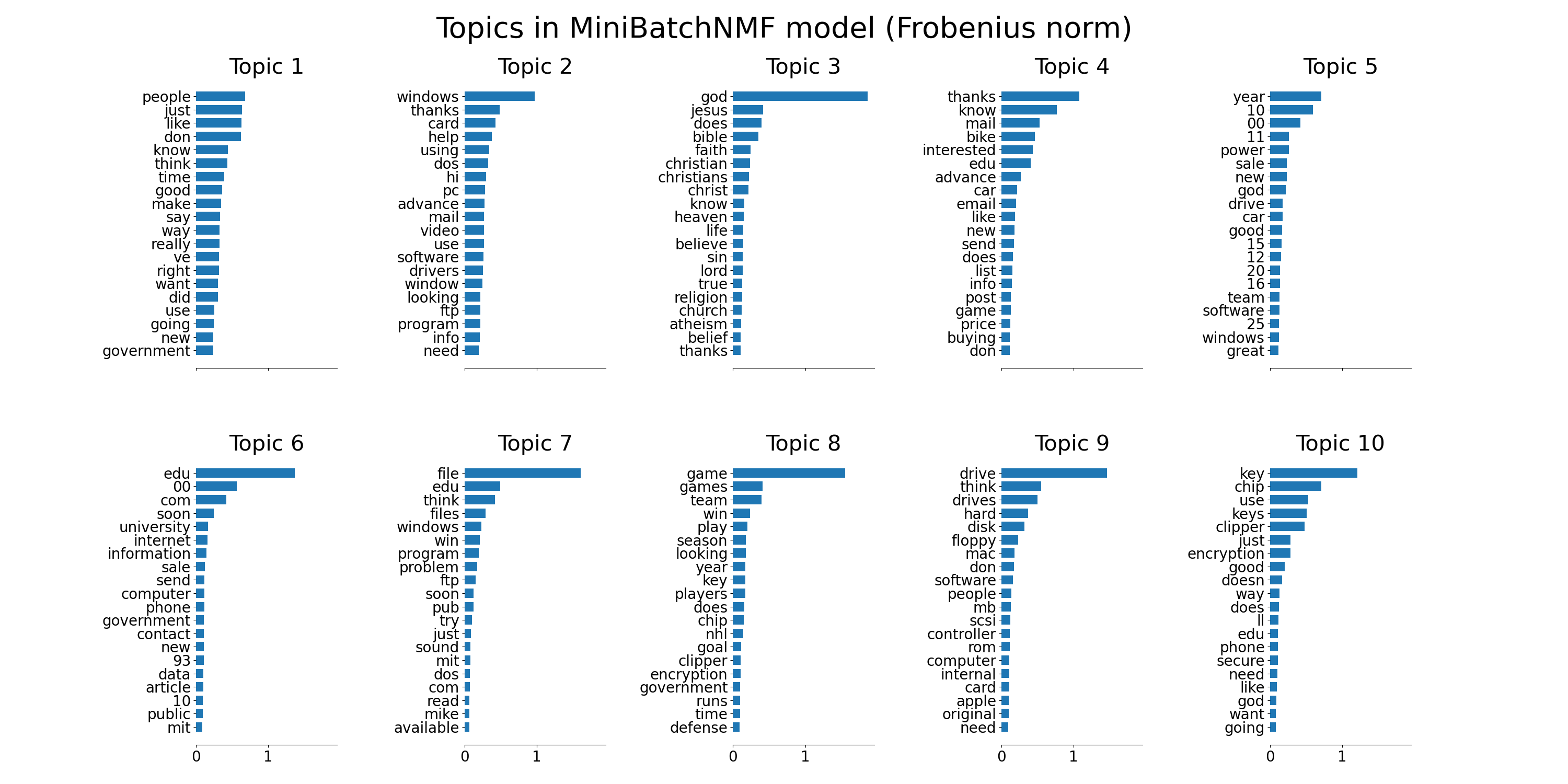

Topic Modeling is one of my favorite NLP techniques. It is a technique for discovering topics in a large collection of documents: it lets you take unstructured text and bring structure to it in the form of topics, which are collections of related words. A subject matter expert can then interpret these topics to give them additional meaning.

We scraped the data we'll be using for this analysis from the official Tesla User Forums. It's a collection of about 55,000 entries consisting of Tesla owners asking questions about their cars and the community providing answers. User forums are a great place to mine customer feedback and understand areas of interest, so we'll approach this as a Product Manager looking for areas to investigate.

The specific methodology used in this analysis is Latent Dirichlet Allocation (LDA), a probabilistic model for generating groups of words associated with n topics. LDA treats each document as a mixture of topics and each topic as a mixture of words; the algorithm calculates the probability of each word being associated with a topic [1]. LDA is arguably the most common method for building topic models in NLP. This post will not get into the details of LDA, but we'll be using the Gensim library to perform the analysis. If you would like to learn more about LDA, check out the paper by Blei, Ng, & Jordan (2003) [1].

We must clean the text used for Topic Modeling before analysis. The objective is to find as much commonality amongst words as possible, so you want to avoid issues like Run, run, and running being interpreted as different words. I've covered Text Cleaning in this series already.

Two terms you will want to understand when evaluating LDA models are:

- Perplexity: the lower the perplexity, the better the model.
- Coherence: the higher the topic coherence, the more human-interpretable the topic.
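To make the two evaluation terms, perplexity and coherence, more concrete, here is a minimal plain-Python sketch of each. Perplexity is computed as the exponentiated negative mean log-likelihood per word, so a model that spreads probability evenly over four words has a perplexity of 4. (Gensim exposes a related per-word likelihood bound through `LdaModel.log_perplexity`.) For coherence, the sketch uses the UMass measure, chosen here only because it can be computed from document co-occurrence counts; Gensim's `CoherenceModel` also offers other measures such as `c_v`, and library implementations differ in smoothing and averaging, so these numbers will not match Gensim's output. The function names and toy documents are hypothetical.

```python
import math
from itertools import combinations
from typing import Iterable, List, Sequence


def perplexity(word_log_probs: Sequence[float]) -> float:
    """exp(-mean natural-log likelihood per word); lower means a better fit."""
    return math.exp(-sum(word_log_probs) / len(word_log_probs))


def umass_coherence(top_words: Sequence[str], documents: Iterable[List[str]]) -> float:
    """UMass coherence of one topic; higher means more coherent.

    top_words must be ordered from most to least probable, and every top
    word is assumed to occur in at least one document (avoids division by 0).
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words: str) -> int:
        # Number of documents containing all of the given words
        return sum(1 for d in doc_sets if all(w in d for w in words))

    score = 0.0
    # combinations yields index pairs (j, i) with j < i, so w_j ranks above w_i
    for j, i in combinations(range(len(top_words)), 2):
        w_i, w_j = top_words[i], top_words[j]
        score += math.log((doc_freq(w_i, w_j) + 1) / doc_freq(w_j))
    return score


if __name__ == "__main__":
    docs = [
        ["battery", "charge", "range"],
        ["battery", "charge"],
        ["battery", "autopilot"],
        ["autopilot", "update"],
    ]
    # Uniform model over four words: perplexity is 4 (up to float rounding)
    print(perplexity([math.log(0.25)] * 4))
    # Words that co-occur score higher than words that never do
    print(umass_coherence(["battery", "charge"], docs))  # log((2 + 1) / 3) = 0.0
    print(umass_coherence(["battery", "update"], docs))  # log(1 / 3), about -1.10
```

Note that the two measures can disagree: a model tuned only for low perplexity does not always produce the most interpretable topics, which is why coherence is the score swept over topic counts in this analysis.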
